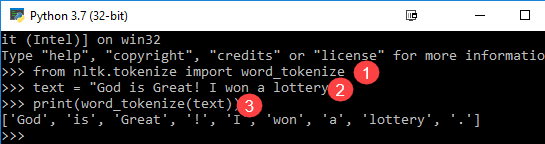

It is clear that this function breaks the text into individual sentences. Such output serves as an important feature for machine learning, since the text ultimately has to be turned into numeric features. The examples above are good stepping stones for understanding the mechanics of word and sentence tokenization.
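The sentence-splitting step described above can be sketched as follows. This is a minimal sketch, not the code behind the original output. The helper name `split_into_sentences` is hypothetical, and the regex fallback is an assumption that is used only when NLTK or its Punkt sentence model (fetched separately with `nltk.download`) is unavailable. The sample text reuses the two example sentences that appear later on this page.

```python
import re


def split_into_sentences(text):
    """Split raw text into sentences.

    Uses NLTK's sent_tokenize when NLTK and its Punkt model data are
    available; otherwise falls back to a naive regex split after '.', '!'
    or '?' followed by whitespace (an assumption, not NLTK's behaviour).
    """
    try:
        from nltk.tokenize import sent_tokenize
        return sent_tokenize(text)
    except (ImportError, LookupError):
        # ImportError: NLTK is not installed.
        # LookupError: NLTK is installed but the Punkt data was never downloaded.
        return [s for s in re.split(r"(?<=[.!?])\s+", text.strip()) if s]


if __name__ == "__main__":
    text = "Arthur didn't feel very good. I won a lottery."
    for sentence in split_into_sentences(text):
        print(sentence)
```

For this input both code paths should yield the same two sentences; the fallback is much weaker on abbreviations such as "Dr." than the trained Punkt model.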

| Field | Value |
|---|---|
| Uploader | Sagal |
| Date Added | 5 February 2005 |
| File Size | 39.81 Mb |
| Operating Systems | Windows NT/2000/XP/2003/7/8/10, MacOS 10/X |
| Downloads | 39222 |
| Price | Free* [*Free Registration Required] |

Tokenize Words and Sentences with NLTK

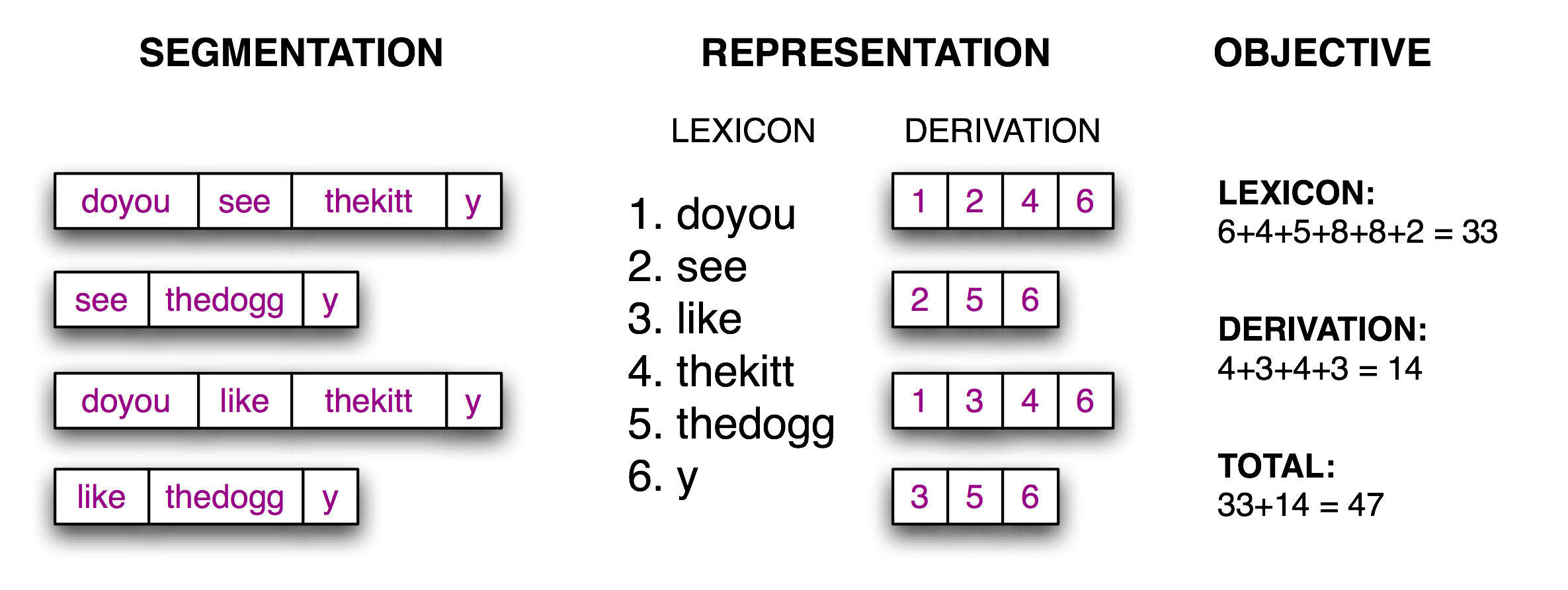

These tokens are very useful for finding patterns in text, and tokenization is considered a base step for stemming and lemmatization. Explanation of the program: this is a demonstration of the various tokenizers provided by NLTK 2.
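Since tokens are the input to stemming, a minimal sketch of that pipeline might look like this. It assumes NLTK's `TreebankWordTokenizer` and `PorterStemmer`, neither of which needs downloaded model data; the function name and the sample sentence are hypothetical. Lemmatization with `WordNetLemmatizer` works in a similar way but requires the WordNet corpus to be downloaded first, so it is left out here.

```python
from nltk.stem import PorterStemmer
from nltk.tokenize import TreebankWordTokenizer


def tokenize_and_stem(sentence):
    """Tokenize one sentence into words, then reduce each word to its Porter stem."""
    tokens = TreebankWordTokenizer().tokenize(sentence)
    stemmer = PorterStemmer()
    # Keep purely alphabetic tokens so punctuation such as "." is not stemmed.
    return [stemmer.stem(token) for token in tokens if token.isalpha()]


if __name__ == "__main__":
    print(tokenize_and_stem("The cats were running."))
```

With the sample sentence, "cats" should come back as "cat" and "running" as "run", which is the kind of normalisation that makes later pattern counting more robust.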

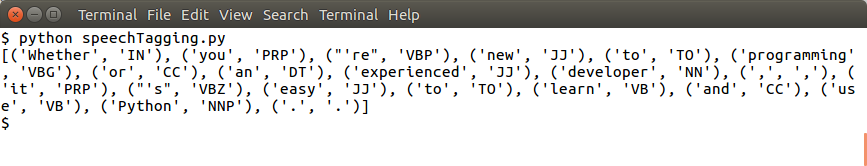

Each sentence is then tokenized into words using four different word tokenizers.
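A minimal sketch of the word-tokenizer comparison, run on the page's own example sentences. It covers three of the four tokenizers named in the text; `PunctWordTokenizer` belonged to NLTK 2 and is omitted on the assumption that it is not available in current NLTK releases. The dictionary and function names are hypothetical.

```python
from nltk.tokenize import TreebankWordTokenizer, WhitespaceTokenizer, WordPunctTokenizer

# Three of the four tokenizers named in the text; PunctWordTokenizer is left out
# because it does not appear to be available in current NLTK releases.
TOKENIZERS = {
    "TreebankWordTokenizer": TreebankWordTokenizer(),
    "WordPunctTokenizer": WordPunctTokenizer(),
    "WhitespaceTokenizer": WhitespaceTokenizer(),
}


def compare_tokenizers(sentence):
    """Return the token list each tokenizer produces for one sentence."""
    return {name: tokenizer.tokenize(sentence) for name, tokenizer in TOKENIZERS.items()}


if __name__ == "__main__":
    for sentence in ["Arthur didn't feel very good.", "I won a lottery."]:
        for name, tokens in compare_tokenizers(sentence).items():
            print(f"{name:22} {tokens}")
```

For "Arthur didn't feel very good." this should give `['Arthur', 'did', "n't", 'feel', 'very', 'good', '.']` from the Treebank tokenizer, `['Arthur', 'didn', "'", 't', 'feel', 'very', 'good', '.']` from `WordPunctTokenizer`, and `['Arthur', "didn't", 'feel', 'very', 'good.']` from `WhitespaceTokenizer`, which shows how differently each one treats the contraction and the final period.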

Tokenizing Words and Sentences with NLTK - Python Tutorial

Could you clarify what you mean by "underestimate" punctuation symbols? However, I've just got up to the method like.

Please read about Bag of Words or CountVectorizer. The initial example text provides two sentences that demonstrate how each word tokenizer handles non-ASCII characters and the simple punctuation of contractions. To calculate a ratio such as the number of words per sentence, you need both sentence tokenization and word tokenization. Seeing that there are so many downvotes, I want to make sure I didn't miss something.
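To make the ratio concrete, here is a hedged sketch that computes the average number of words per sentence. Treating the ratio as words per sentence is an assumption, the function names are hypothetical, and the regex sentence splitter is a naive stand-in for `nltk.sent_tokenize`. Sentences are split first because `TreebankWordTokenizer` expects text that has already been segmented into sentences.

```python
import re

from nltk.tokenize import TreebankWordTokenizer


def split_sentences(text):
    # Naive stand-in for nltk.sent_tokenize (which needs the Punkt data):
    # split after '.', '!' or '?' when followed by whitespace.
    return [s for s in re.split(r"(?<=[.!?])\s+", text.strip()) if s]


def words_per_sentence(text):
    """Average number of word tokens per sentence, ignoring pure punctuation."""
    sentences = split_sentences(text)
    if not sentences:
        return 0.0
    tokenizer = TreebankWordTokenizer()
    word_count = sum(
        1
        for sentence in sentences
        for token in tokenizer.tokenize(sentence)
        if any(ch.isalnum() for ch in token)  # skip tokens like "." or ","
    )
    return word_count / len(sentences)


if __name__ == "__main__":
    print(words_per_sentence("Arthur didn't feel very good. I won a lottery."))
```

For the two example sentences this counts 10 word tokens over 2 sentences, i.e. `5.0`; note that the Treebank split of "didn't" into "did" and "n't" counts as two words.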

Which method, Python's built-in one or NLTK's, allows me to do this? Further, the sent module parsed those sentences and showed the output. More importantly, how can I dismiss punctuation symbols?
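Two common ways to dismiss punctuation are sketched below with NLTK tokenizers that need no downloaded data. Both function names are hypothetical, and which approach suits you depends on how you want contractions handled.

```python
import string

from nltk.tokenize import RegexpTokenizer, TreebankWordTokenizer


def words_only_regexp(text):
    """Keep only runs of word characters; punctuation never becomes a token."""
    return RegexpTokenizer(r"\w+").tokenize(text)


def drop_punctuation_tokens(sentence):
    """Tokenize normally, then discard tokens made up entirely of punctuation."""
    tokens = TreebankWordTokenizer().tokenize(sentence)
    return [t for t in tokens if not all(ch in string.punctuation for ch in t)]


if __name__ == "__main__":
    sentence = "Arthur didn't feel very good."
    print(words_only_regexp(sentence))
    print(drop_punctuation_tokens(sentence))
```

On "Arthur didn't feel very good." the regex version returns `['Arthur', 'didn', 't', 'feel', 'very', 'good']`, while the filtering version keeps the Treebank contraction pieces and returns `['Arthur', 'did', "n't", 'feel', 'very', 'good']`.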


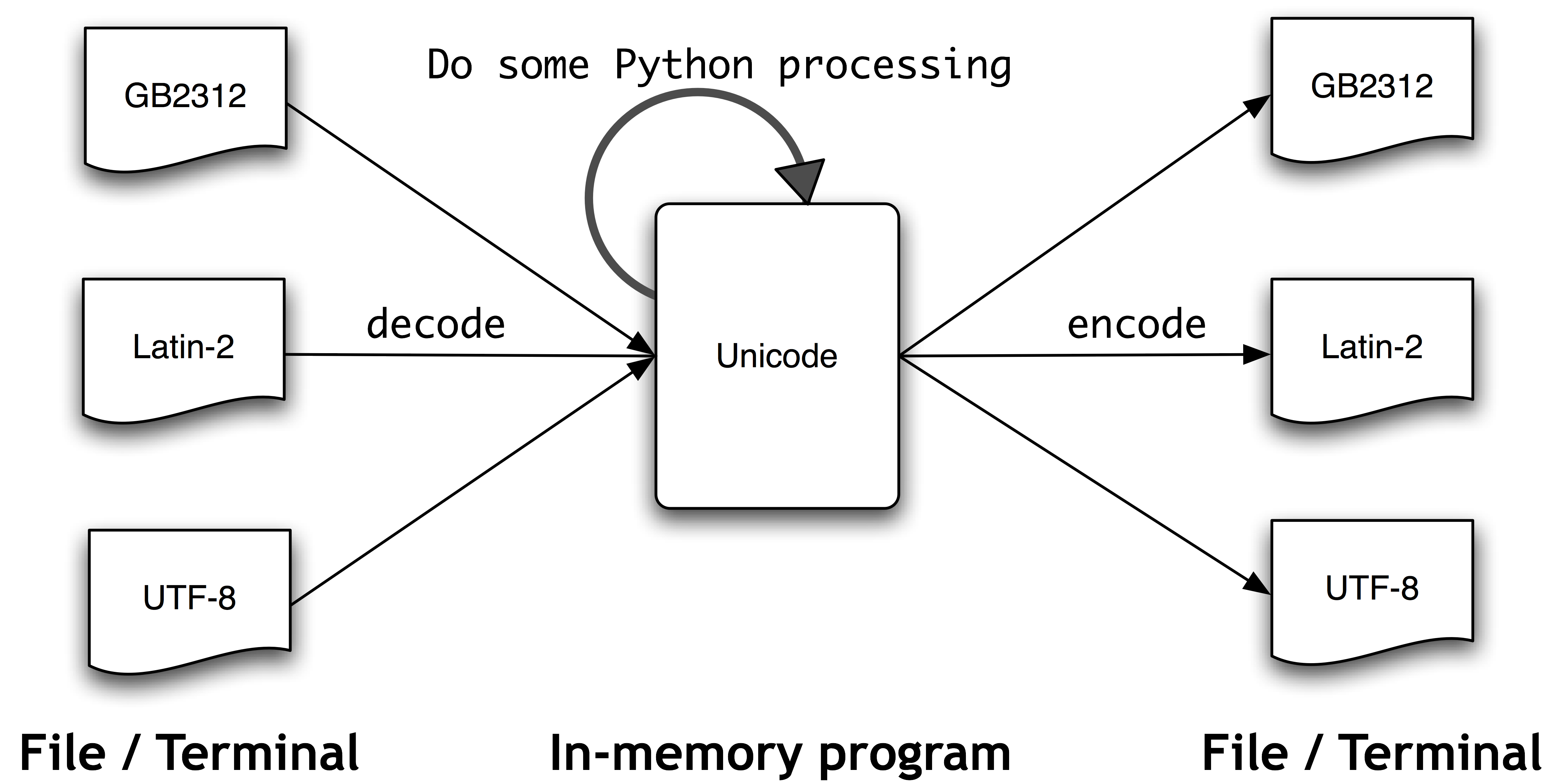

Word tokenization is a crucial step in converting a text string into numeric data. Tasks such as text classification or spam filtering make use of NLP along with deep learning libraries such as Keras and TensorFlow.
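As an illustration of the text-to-numbers step, the sketch below builds simple bag-of-words count vectors by hand with `collections.Counter`. It is not scikit-learn's `CountVectorizer`, only the same idea in miniature; lowercasing and dropping non-alphanumeric tokens are assumptions, and the function name is hypothetical.

```python
from collections import Counter

from nltk.tokenize import WordPunctTokenizer


def bag_of_words(documents):
    """Return a sorted vocabulary and one count vector per document."""
    tokenizer = WordPunctTokenizer()
    # Lowercase and keep only alphanumeric tokens (drops "." and the "'" of contractions).
    tokenized = [
        [token.lower() for token in tokenizer.tokenize(doc) if token.isalnum()]
        for doc in documents
    ]
    vocabulary = sorted({token for doc in tokenized for token in doc})
    vectors = []
    for doc in tokenized:
        counts = Counter(doc)
        vectors.append([counts[word] for word in vocabulary])
    return vocabulary, vectors


if __name__ == "__main__":
    vocab, vecs = bag_of_words(["Arthur didn't feel very good.", "I won a lottery."])
    print(vocab)
    for vec in vecs:
        print(vec)
```

Each document becomes a row of counts aligned with the shared, sorted vocabulary, which is the kind of numeric input a classifier expects.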

Arthur didn't feel very good. I am using NLTK, and I want to create my own custom texts just like the default ones in NLTK.
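One way to get a custom text that behaves like NLTK's bundled sample texts is to wrap your own tokens in `nltk.Text`. This is a sketch under assumptions: the helper name, the sample string, and the choice of `WordPunctTokenizer` are illustrative. For a folder of your own `.txt` files, `nltk.corpus.reader.PlaintextCorpusReader` is another option, not shown here.

```python
import nltk
from nltk.tokenize import WordPunctTokenizer


def build_custom_text(raw, name):
    """Wrap your own raw string in nltk.Text, like the sample texts NLTK ships."""
    tokens = WordPunctTokenizer().tokenize(raw)
    return nltk.Text(tokens, name=name)


if __name__ == "__main__":
    text = build_custom_text(
        "Arthur didn't feel very good. Arthur won a lottery.", name="my custom text"
    )
    print(text.count("Arthur"))  # how often the exact token occurs
    print(nltk.FreqDist(text.tokens).most_common(3))  # frequency distribution of tokens
    text.concordance("Arthur")  # prints each occurrence in context
```

Once wrapped, the custom text supports the same exploratory methods (`count`, `concordance`, and so on) that the built-in sample texts do.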

Need a custom model, trained on a public or custom corpus? The four word tokenizers are:

- TreebankWordTokenizer
- WordPunctTokenizer
- PunctWordTokenizer
- WhitespaceTokenizer

The pattern tokenizer does its own sentence and word tokenization, and is included to show how that library tokenizes text before further parsing.

The output of word tokenization can be converted to a DataFrame for better text understanding in machine learning applications. I won a lottery. Tokenization is a way to split text into tokens.
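A minimal sketch of turning word-tokenization output into a DataFrame, assuming pandas is installed. The column names and the helper name are hypothetical; one row per token keeps the sentence index and position so that later grouping or counting stays easy.

```python
import pandas as pd
from nltk.tokenize import WordPunctTokenizer


def tokens_to_dataframe(sentences):
    """One row per token, recording which sentence it came from and its position."""
    tokenizer = WordPunctTokenizer()
    rows = [
        {"sentence_id": i, "position": j, "token": token}
        for i, sentence in enumerate(sentences)
        for j, token in enumerate(tokenizer.tokenize(sentence))
    ]
    return pd.DataFrame(rows, columns=["sentence_id", "position", "token"])


if __name__ == "__main__":
    df = tokens_to_dataframe(["Arthur didn't feel very good.", "I won a lottery."])
    print(df)
    print(df["token"].value_counts().head())
```

From here, standard pandas operations such as `value_counts` or `groupby("sentence_id")` give token frequencies or per-sentence lengths without further custom code.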
